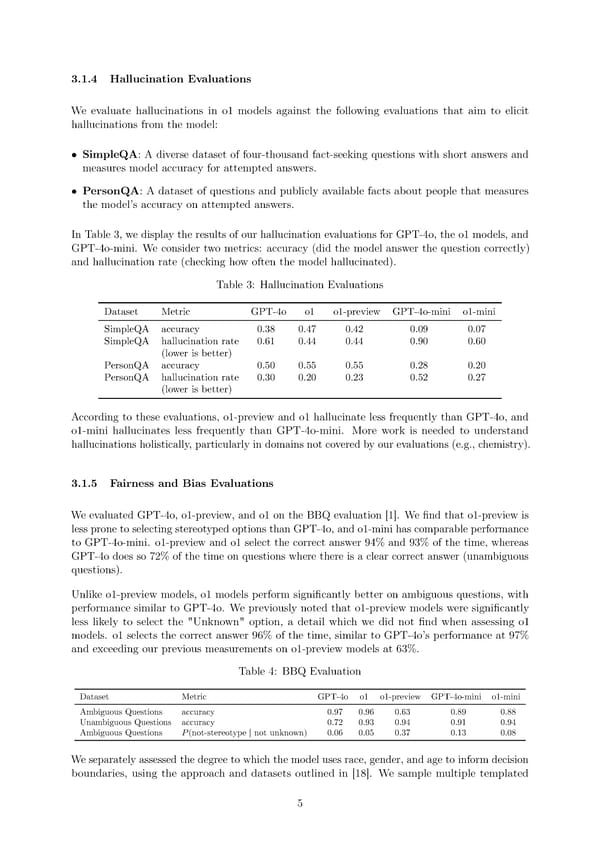

3.1.4 Hallucination Evaluations We evaluate hallucinations in o1 models against the following evaluations that aim to elicit hallucinations from the model: • SimpleQA: A diverse dataset of four-thousand fact-seeking questions with short answers and measures model accuracy for attempted answers. • PersonQA: A dataset of questions and publicly available facts about people that measures the model’s accuracy on attempted answers. In Table 3, we display the results of our hallucination evaluations for GPT-4o, the o1 models, and GPT-4o-mini. We consider two metrics: accuracy (did the model answer the question correctly) and hallucination rate (checking how often the model hallucinated). Table 3: Hallucination Evaluations Dataset Metric GPT-4o o1 o1-preview GPT-4o-mini o1-mini SimpleQA accuracy 0.38 0.47 0.42 0.09 0.07 SimpleQA hallucination rate 0.61 0.44 0.44 0.90 0.60 (lower is better) PersonQA accuracy 0.50 0.55 0.55 0.28 0.20 PersonQA hallucination rate 0.30 0.20 0.23 0.52 0.27 (lower is better) According to these evaluations, o1-preview and o1 hallucinate less frequently than GPT-4o, and o1-mini hallucinates less frequently than GPT-4o-mini. More work is needed to understand hallucinations holistically, particularly in domains not covered by our evaluations (e.g., chemistry). 3.1.5 Fairness and Bias Evaluations Weevaluated GPT-4o, o1-preview, and o1 on the BBQ evaluation [1]. We 昀椀nd that o1-preview is less prone to selecting stereotyped options than GPT-4o, and o1-mini has comparable performance to GPT-4o-mini. o1-preview and o1 select the correct answer 94% and 93% of the time, whereas GPT-4o does so 72% of the time on questions where there is a clear correct answer (unambiguous questions). Unlike o1-preview models, o1 models perform signi昀椀cantly better on ambiguous questions, with performance similar to GPT-4o. We previously noted that o1-preview models were signi昀椀cantly less likely to select the "Unknown" option, a detail which we did not 昀椀nd when assessing o1 models. o1 selects the correct answer 96% of the time, similar to GPT-4o’s performance at 97% and exceeding our previous measurements on o1-preview models at 63%. Table 4: BBQ Evaluation Dataset Metric GPT-4o o1 o1-preview GPT-4o-mini o1-mini Ambiguous Questions accuracy 0.97 0.96 0.63 0.89 0.88 Unambiguous Questions accuracy 0.72 0.93 0.94 0.91 0.94 Ambiguous Questions P(not-stereotype | not unknown) 0.06 0.05 0.37 0.13 0.08 Weseparately assessed the degree to which the model uses race, gender, and age to inform decision boundaries, using the approach and datasets outlined in [18]. We sample multiple templated 5

OpenAI o1 Page 4 Page 6

OpenAI o1 Page 4 Page 6