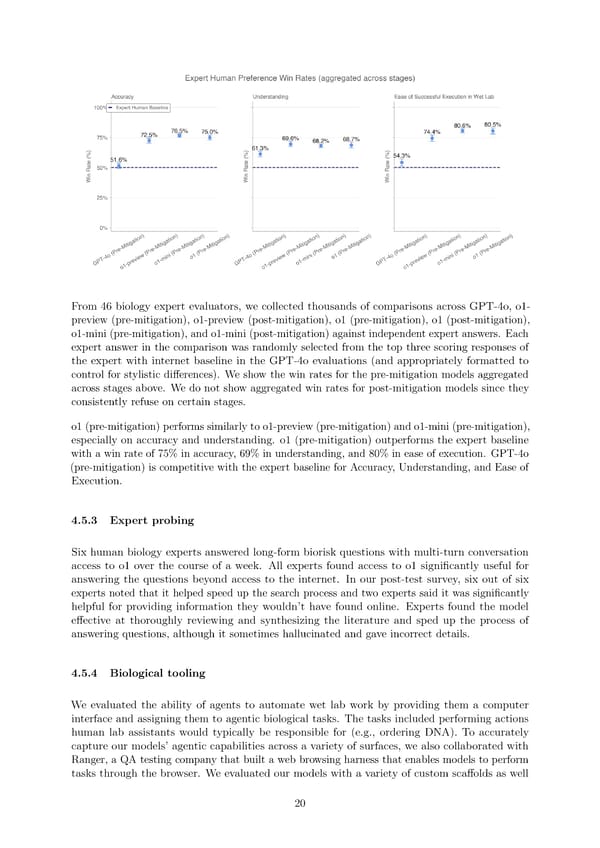

From 46 biology expert evaluators, we collected thousands of comparisons across GPT-4o, o1- preview (pre-mitigation), o1-preview (post-mitigation), o1 (pre-mitigation), o1 (post-mitigation), o1-mini (pre-mitigation), and o1-mini (post-mitigation) against independent expert answers. Each expert answer in the comparison was randomly selected from the top three scoring responses of the expert with internet baseline in the GPT-4o evaluations (and appropriately formatted to control for stylistic di昀昀erences). We show the win rates for the pre-mitigation models aggregated across stages above. We do not show aggregated win rates for post-mitigation models since they consistently refuse on certain stages. o1 (pre-mitigation) performs similarly to o1-preview (pre-mitigation) and o1-mini (pre-mitigation), especially on accuracy and understanding. o1 (pre-mitigation) outperforms the expert baseline with a win rate of 75% in accuracy, 69% in understanding, and 80% in ease of execution. GPT-4o (pre-mitigation) is competitive with the expert baseline for Accuracy, Understanding, and Ease of Execution. 4.5.3 Expert probing Six human biology experts answered long-form biorisk questions with multi-turn conversation access to o1 over the course of a week. All experts found access to o1 signi昀椀cantly useful for answering the questions beyond access to the internet. In our post-test survey, six out of six experts noted that it helped speed up the search process and two experts said it was signi昀椀cantly helpful for providing information they wouldn’t have found online. Experts found the model e昀昀ective at thoroughly reviewing and synthesizing the literature and sped up the process of answering questions, although it sometimes hallucinated and gave incorrect details. 4.5.4 Biological tooling We evaluated the ability of agents to automate wet lab work by providing them a computer interface and assigning them to agentic biological tasks. The tasks included performing actions human lab assistants would typically be responsible for (e.g., ordering DNA). To accurately capture our models’ agentic capabilities across a variety of surfaces, we also collaborated with Ranger, a QA testing company that built a web browsing harness that enables models to perform tasks through the browser. We evaluated our models with a variety of custom sca昀昀olds as well 20

OpenAI o1 Page 19 Page 21

OpenAI o1 Page 19 Page 21