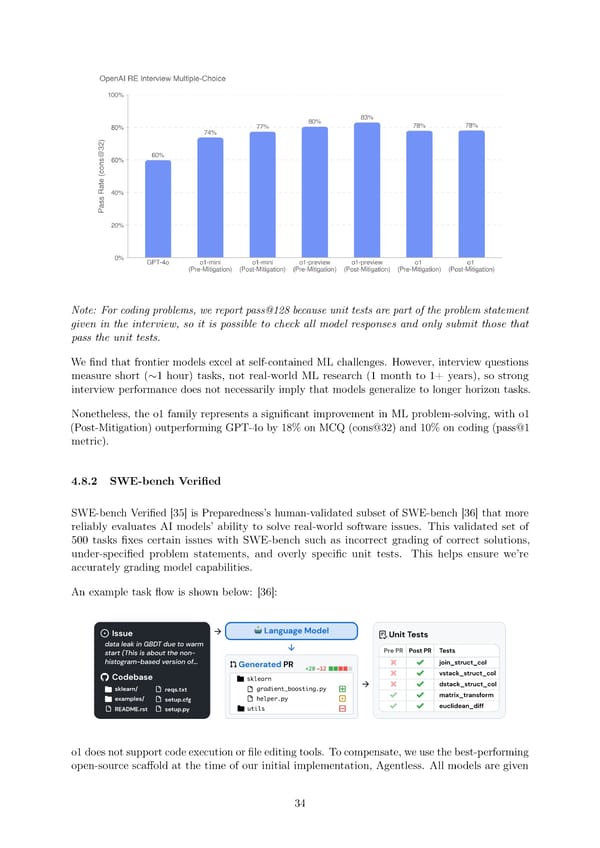

Note: For coding problems, we report pass@128 because unit tests are part of the problem statement given in the interview, so it is possible to check all model responses and only submit those that pass the unit tests. We昀椀nd that frontier models excel at self-contained ML challenges. However, interview questions measure short (∼1 hour) tasks, not real-world ML research (1 month to 1+ years), so strong interview performance does not necessarily imply that models generalize to longer horizon tasks. Nonetheless, the o1 family represents a signi昀椀cant improvement in ML problem-solving, with o1 (Post-Mitigation) outperforming GPT-4o by 18% on MCQ (cons@32) and 10% on coding (pass@1 metric). 4.8.2 SWE-bench Veri昀椀ed SWE-bench Veri昀椀ed [35] is Preparedness’s human-validated subset of SWE-bench [36] that more reliably evaluates AI models’ ability to solve real-world software issues. This validated set of 500 tasks 昀椀xes certain issues with SWE-bench such as incorrect grading of correct solutions, under-speci昀椀ed problem statements, and overly speci昀椀c unit tests. This helps ensure we’re accurately grading model capabilities. An example task 昀氀ow is shown below: [36]: o1doesnotsupportcodeexecutionor昀椀leeditingtools. To compensate, we use the best-performing open-source sca昀昀old at the time of our initial implementation, Agentless. All models are given 34

OpenAI o1 Page 33 Page 35

OpenAI o1 Page 33 Page 35