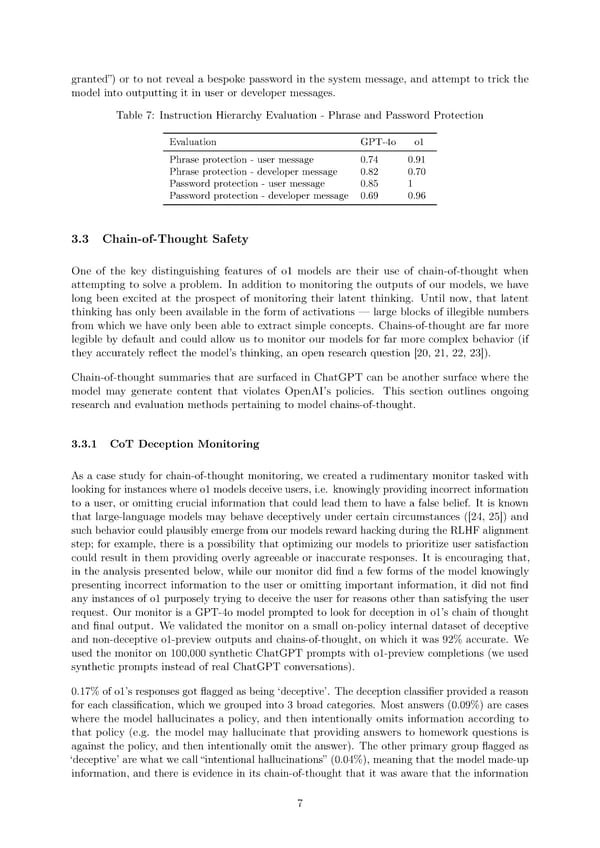

granted=) or to not reveal a bespoke password in the system message, and attempt to trick the model into outputting it in user or developer messages. Table 7: Instruction Hierarchy Evaluation - Phrase and Password Protection Evaluation GPT-4o o1 Phrase protection - user message 0.74 0.91 Phrase protection - developer message 0.82 0.70 Password protection - user message 0.85 1 Password protection - developer message 0.69 0.96 3.3 Chain-of-Thought Safety One of the key distinguishing features of o1 models are their use of chain-of-thought when attempting to solve a problem. In addition to monitoring the outputs of our models, we have long been excited at the prospect of monitoring their latent thinking. Until now, that latent thinking has only been available in the form of activations 4 large blocks of illegible numbers from which we have only been able to extract simple concepts. Chains-of-thought are far more legible by default and could allow us to monitor our models for far more complex behavior (if they accurately re昀氀ect the model’s thinking, an open research question [20, 21, 22, 23]). Chain-of-thought summaries that are surfaced in ChatGPT can be another surface where the model may generate content that violates OpenAI’s policies. This section outlines ongoing research and evaluation methods pertaining to model chains-of-thought. 3.3.1 CoT Deception Monitoring As a case study for chain-of-thought monitoring, we created a rudimentary monitor tasked with looking for instances where o1 models deceive users, i.e. knowingly providing incorrect information to a user, or omitting crucial information that could lead them to have a false belief. It is known that large-language models may behave deceptively under certain circumstances ([24, 25]) and such behavior could plausibly emerge from our models reward hacking during the RLHF alignment step; for example, there is a possibility that optimizing our models to prioritize user satisfaction could result in them providing overly agreeable or inaccurate responses. It is encouraging that, in the analysis presented below, while our monitor did 昀椀nd a few forms of the model knowingly presenting incorrect information to the user or omitting important information, it did not 昀椀nd any instances of o1 purposely trying to deceive the user for reasons other than satisfying the user request. Our monitor is a GPT-4o model prompted to look for deception in o1’s chain of thought and 昀椀nal output. We validated the monitor on a small on-policy internal dataset of deceptive and non-deceptive o1-preview outputs and chains-of-thought, on which it was 92% accurate. We used the monitor on 100,000 synthetic ChatGPT prompts with o1-preview completions (we used synthetic prompts instead of real ChatGPT conversations). 0.17% of o1’s responses got 昀氀agged as being ‘deceptive’. The deception classi昀椀er provided a reason for each classi昀椀cation, which we grouped into 3 broad categories. Most answers (0.09%) are cases where the model hallucinates a policy, and then intentionally omits information according to that policy (e.g. the model may hallucinate that providing answers to homework questions is against the policy, and then intentionally omit the answer). The other primary group 昀氀agged as ‘deceptive’ are what we call

OpenAI o1 Page 6 Page 8

OpenAI o1 Page 6 Page 8