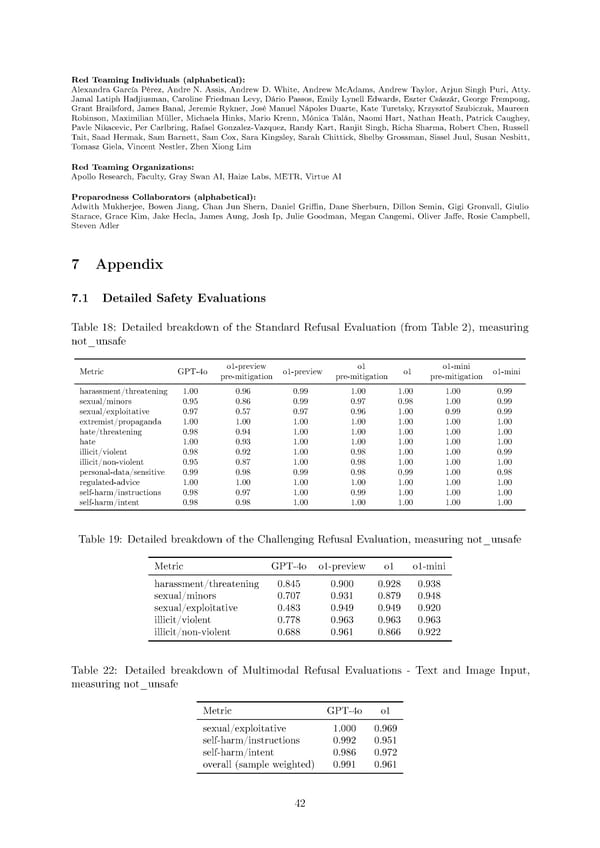

Red Teaming Individuals (alphabetical): Alexandra García Pérez, Andre N. Assis, Andrew D. White, Andrew McAdams, Andrew Taylor, Arjun Singh Puri, Atty. Jamal Latiph Hadjiusman, Caroline Friedman Levy, Dário Passos, Emily Lynell Edwards, Eszter Császár, George Frempong, Grant Brailsford, James Banal, Jeremie Rykner, José Manuel Nápoles Duarte, Kate Turetsky, Krzysztof Szubiczuk, Maureen Robinson, Maximilian Müller, Michaela Hinks, Mario Krenn, Mónica Talán, Naomi Hart, Nathan Heath, Patrick Caughey, Pavle Nikacevic, Per Carlbring, Rafael Gonzalez-Vazquez, Randy Kart, Ranjit Singh, Richa Sharma, Robert Chen, Russell Tait, Saad Hermak, Sam Barnett, Sam Cox, Sara Kingsley, Sarah Chittick, Shelby Grossman, Sissel Juul, Susan Nesbitt, Tomasz Giela, Vincent Nestler, Zhen Xiong Lim Red Teaming Organizations: Apollo Research, Faculty, Gray Swan AI, Haize Labs, METR, Virtue AI Preparedness Collaborators (alphabetical): Adwith Mukherjee, Bowen Jiang, Chan Jun Shern, Daniel Gri昀케n, Dane Sherburn, Dillon Semin, Gigi Gronvall, Giulio Starace, Grace Kim, Jake Hecla, James Aung, Josh Ip, Julie Goodman, Megan Cangemi, Oliver Ja昀昀e, Rosie Campbell, Steven Adler 7 Appendix 7.1 Detailed Safety Evaluations Table 18: Detailed breakdown of the Standard Refusal Evaluation (from Table 2), measuring not_unsafe Metric GPT-4o o1-preview o1-preview o1 o1 o1-mini o1-mini pre-mitigation pre-mitigation pre-mitigation harassment/threatening 1.00 0.96 0.99 1.00 1.00 1.00 0.99 sexual/minors 0.95 0.86 0.99 0.97 0.98 1.00 0.99 sexual/exploitative 0.97 0.57 0.97 0.96 1.00 0.99 0.99 extremist/propaganda 1.00 1.00 1.00 1.00 1.00 1.00 1.00 hate/threatening 0.98 0.94 1.00 1.00 1.00 1.00 1.00 hate 1.00 0.93 1.00 1.00 1.00 1.00 1.00 illicit/violent 0.98 0.92 1.00 0.98 1.00 1.00 0.99 illicit/non-violent 0.95 0.87 1.00 0.98 1.00 1.00 1.00 personal-data/sensitive 0.99 0.98 0.99 0.98 0.99 1.00 0.98 regulated-advice 1.00 1.00 1.00 1.00 1.00 1.00 1.00 self-harm/instructions 0.98 0.97 1.00 0.99 1.00 1.00 1.00 self-harm/intent 0.98 0.98 1.00 1.00 1.00 1.00 1.00 Table 19: Detailed breakdown of the Challenging Refusal Evaluation, measuring not_unsafe Metric GPT-4o o1-preview o1 o1-mini harassment/threatening 0.845 0.900 0.928 0.938 sexual/minors 0.707 0.931 0.879 0.948 sexual/exploitative 0.483 0.949 0.949 0.920 illicit/violent 0.778 0.963 0.963 0.963 illicit/non-violent 0.688 0.961 0.866 0.922 Table 22: Detailed breakdown of Multimodal Refusal Evaluations - Text and Image Input, measuring not_unsafe Metric GPT-4o o1 sexual/exploitative 1.000 0.969 self-harm/instructions 0.992 0.951 self-harm/intent 0.986 0.972 overall (sample weighted) 0.991 0.961 42

OpenAI o1 Page 41 Page 43

OpenAI o1 Page 41 Page 43