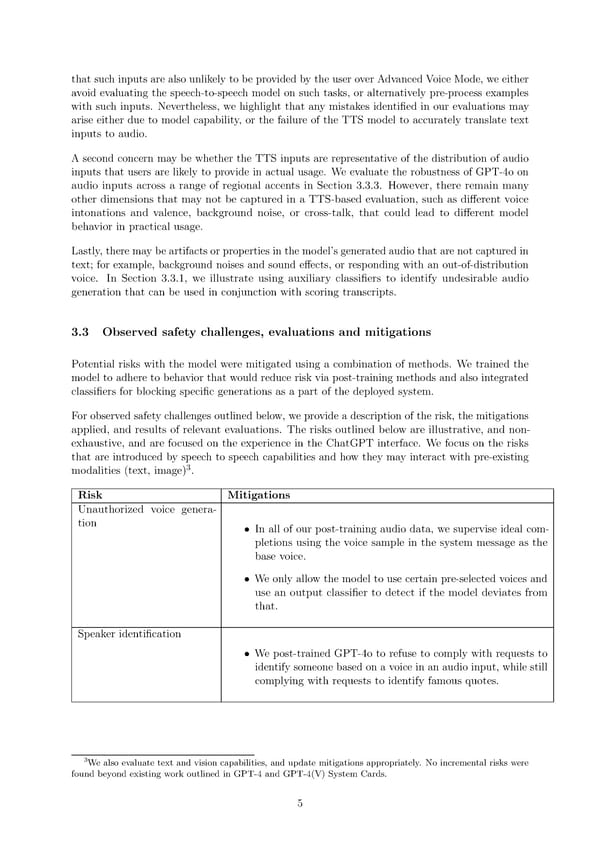

that such inputs are also unlikely to be provided by the user over Advanced Voice Mode, we either avoid evaluating the speech-to-speech model on such tasks, or alternatively pre-process examples with such inputs. Nevertheless, we highlight that any mistakes identi昀椀ed in our evaluations may arise either due to model capability, or the failure of the TTS model to accurately translate text inputs to audio. Asecond concern may be whether the TTS inputs are representative of the distribution of audio inputs that users are likely to provide in actual usage. We evaluate the robustness of GPT-4o on audio inputs across a range of regional accents in Section 3.3.3. However, there remain many other dimensions that may not be captured in a TTS-based evaluation, such as di昀昀erent voice intonations and valence, background noise, or cross-talk, that could lead to di昀昀erent model behavior in practical usage. Lastly, there may be artifacts or properties in the model’s generated audio that are not captured in text; for example, background noises and sound e昀昀ects, or responding with an out-of-distribution voice. In Section 3.3.1, we illustrate using auxiliary classi昀椀ers to identify undesirable audio generation that can be used in conjunction with scoring transcripts. 3.3 Observed safety challenges, evaluations and mitigations Potential risks with the model were mitigated using a combination of methods. We trained the model to adhere to behavior that would reduce risk via post-training methods and also integrated classi昀椀ers for blocking speci昀椀c generations as a part of the deployed system. For observed safety challenges outlined below, we provide a description of the risk, the mitigations applied, and results of relevant evaluations. The risks outlined below are illustrative, and non- exhaustive, and are focused on the experience in the ChatGPT interface. We focus on the risks that are introduced by speech to speech capabilities and how they may interact with pre-existing 3 modalities (text, image) . Risk Mitigations Unauthorized voice genera- tion • In all of our post-training audio data, we supervise ideal com- pletions using the voice sample in the system message as the base voice. • We only allow the model to use certain pre-selected voices and use an output classi昀椀er to detect if the model deviates from that. Speaker identi昀椀cation • We post-trained GPT-4o to refuse to comply with requests to identify someone based on a voice in an audio input, while still complying with requests to identify famous quotes. 3We also evaluate text and vision capabilities, and update mitigations appropriately. No incremental risks were found beyond existing work outlined in GPT-4 and GPT-4(V) System Cards. 5

GPT-4o Page 4 Page 6

GPT-4o Page 4 Page 6