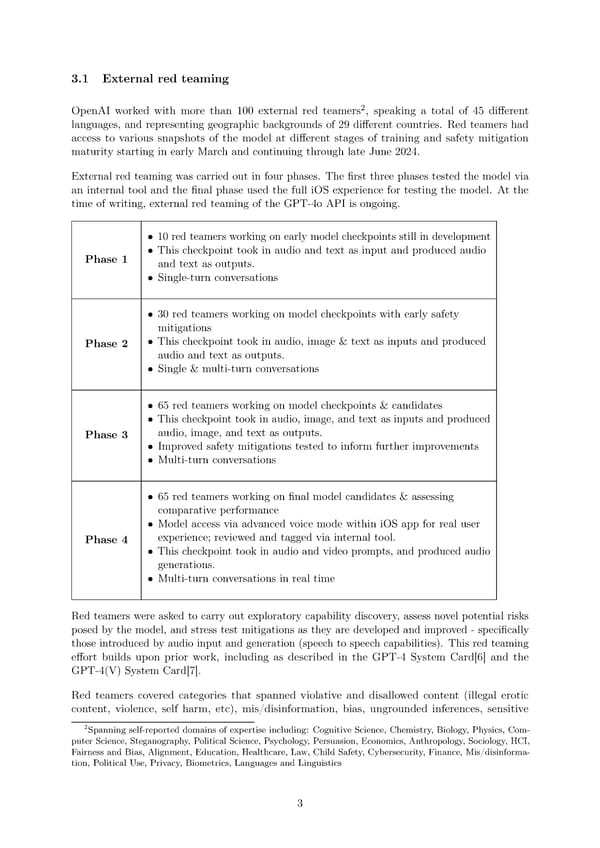

3.1 External red teaming

OpenAI worked with more than 100 external red teamers², speaking a total of 45 different languages, and representing geographic backgrounds of 29 different countries. Red teamers had access to various snapshots of the model at different stages of training and safety mitigation maturity starting in early March and continuing through late June 2024.

External red teaming was carried out in four phases. The first three phases tested the model via an internal tool and the final phase used the full iOS experience for testing the model. At the time of writing, external red teaming of the GPT-4o API is ongoing.

Phase 1
• 10 red teamers working on early model checkpoints still in development
• This checkpoint took in audio and text as input and produced audio and text as outputs.
• Single-turn conversations

Phase 2
• 30 red teamers working on model checkpoints with early safety mitigations
• This checkpoint took in audio, image & text as inputs and produced audio and text as outputs.
• Single & multi-turn conversations

Phase 3
• 65 red teamers working on model checkpoints & candidates
• This checkpoint took in audio, image, and text as inputs and produced audio, image, and text as outputs.
• Improved safety mitigations tested to inform further improvements
• Multi-turn conversations

Phase 4
• 65 red teamers working on final model candidates & assessing comparative performance
• Model access via advanced voice mode within iOS app for real user experience; reviewed and tagged via internal tool.
• This checkpoint took in audio and video prompts, and produced audio generations.
• Multi-turn conversations in real time

Red teamers were asked to carry out exploratory capability discovery, assess novel potential risks posed by the model, and stress test mitigations as they are developed and improved - specifically those introduced by audio input and generation (speech to speech capabilities).
² Spanning self-reported domains of expertise including: Cognitive Science, Chemistry, Biology, Physics, Computer Science, Steganography, Political Science, Psychology, Persuasion, Economics, Anthropology, Sociology, HCI, Fairness and Bias, Alignment, Education, Healthcare, Law, Child Safety, Cybersecurity, Finance, Mis/disinformation, Political Use, Privacy, Biometrics, Languages and Linguistics

This red teaming effort builds upon prior work, including as described in the GPT-4 System Card[6] and the GPT-4(V) System Card[7].

Red teamers covered categories that spanned violative and disallowed content (illegal erotic content, violence, self-harm, etc.), mis/disinformation, bias, ungrounded inferences, sensitive

GPT-4o Page 2 Page 4
