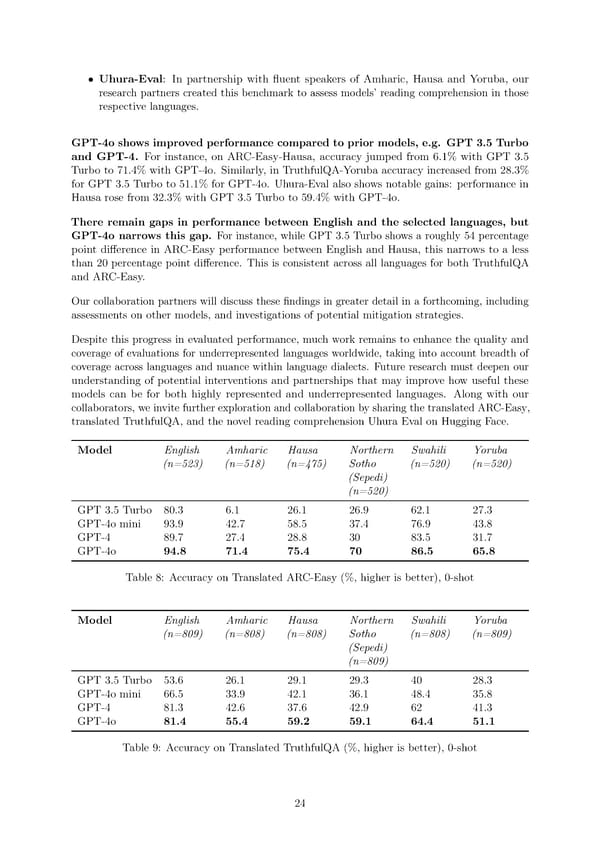

• Uhura-Eval: In partnership with 昀氀uent speakers of Amharic, Hausa and Yoruba, our research partners created this benchmark to assess models’ reading comprehension in those respective languages. GPT-4o shows improved performance compared to prior models, e.g. GPT 3.5 Turbo and GPT-4. For instance, on ARC-Easy-Hausa, accuracy jumped from 6.1% with GPT 3.5 Turbo to 71.4% with GPT-4o. Similarly, in TruthfulQA-Yoruba accuracy increased from 28.3% for GPT 3.5 Turbo to 51.1% for GPT-4o. Uhura-Eval also shows notable gains: performance in Hausa rose from 32.3% with GPT 3.5 Turbo to 59.4% with GPT-4o. There remain gaps in performance between English and the selected languages, but GPT-4o narrows this gap. For instance, while GPT 3.5 Turbo shows a roughly 54 percentage point di昀昀erence in ARC-Easy performance between English and Hausa, this narrows to a less than 20 percentage point di昀昀erence. This is consistent across all languages for both TruthfulQA and ARC-Easy. Our collaboration partners will discuss these 昀椀ndings in greater detail in a forthcoming, including assessments on other models, and investigations of potential mitigation strategies. Despite this progress in evaluated performance, much work remains to enhance the quality and coverage of evaluations for underrepresented languages worldwide, taking into account breadth of coverage across languages and nuance within language dialects. Future research must deepen our understanding of potential interventions and partnerships that may improve how useful these models can be for both highly represented and underrepresented languages. Along with our collaborators, we invite further exploration and collaboration by sharing the translated ARC-Easy, translated TruthfulQA, and the novel reading comprehension Uhura Eval on Hugging Face. Model English Amharic Hausa Northern Swahili Yoruba (n=523) (n=518) (n=475) Sotho (n=520) (n=520) (Sepedi) (n=520) GPT3.5 Turbo 80.3 6.1 26.1 26.9 62.1 27.3 GPT-4o mini 93.9 42.7 58.5 37.4 76.9 43.8 GPT-4 89.7 27.4 28.8 30 83.5 31.7 GPT-4o 94.8 71.4 75.4 70 86.5 65.8 Table 8: Accuracy on Translated ARC-Easy (%, higher is better), 0-shot Model English Amharic Hausa Northern Swahili Yoruba (n=809) (n=808) (n=808) Sotho (n=808) (n=809) (Sepedi) (n=809) GPT3.5 Turbo 53.6 26.1 29.1 29.3 40 28.3 GPT-4o mini 66.5 33.9 42.1 36.1 48.4 35.8 GPT-4 81.3 42.6 37.6 42.9 62 41.3 GPT-4o 81.4 55.4 59.2 59.1 64.4 51.1 Table 9: Accuracy on Translated TruthfulQA (%, higher is better), 0-shot 24

GPT-4o Page 23 Page 25

GPT-4o Page 23 Page 25